I get the appeal of the “best of the best” but a few years ago I decided to only buy components and tech in general with efficiency in mind, and I’m so happy.

My RTX 4060 Ti runs everything but stays surprisingly cool for a GPU, gets by with my 500W PSU with power to spare, is stone silent, and everything fits in a nice small form factor case. My computer is silent, cool and wastes very little power. This is also how I’m choosing phones and many other tech gadgets nowadays.

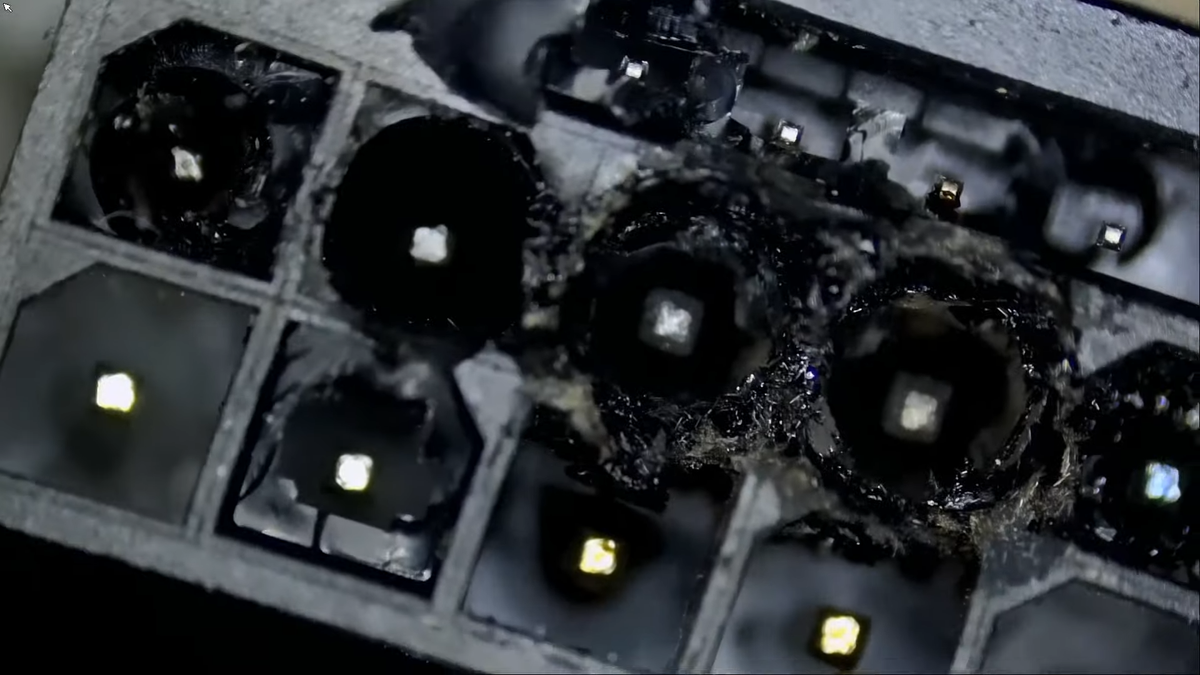

Having your product be so demanding you need to create a new connector to retrofit into old style power supplies, and then having it melt because even your own adaptor can’t handle the power, is not a good idea at all.

Not only am I with you 100%, but I also buy all of my hardware used.

There just aren’t very many games I want to play these days that require a graphics card that weighs more than a house cat.

Most of the games I want to play lately are indies that would run on an AMD APU…

AFAIK it can handle all the power totally fine - it’s just very easy to partially connect instead of fully.

I guess one could make the argument that if it’s so tightly within spec that minor errors can cause catastrophic failure, it can’t really handle it.

But it can also be said that this is just user error being reported as “Nvidia bad” because that farms clicks and upvotes.

It’s not about how tight the spec is - it’s about how poorly designed the connector is if it is easy to partially connect.

You can have very tight specs and not be failure prone.

If a partial connection, a very common event, is not problematic with other GPUs but very problematic with this one - yes, it’s fair to say that running so tightly within spec is a problem, since real-world usage deviates more than the design allows for.

It’s not that common with previous connectors though. They snapped in and without a full connection did not work.

AMD graphics cards don’t melt and their drivers work on everything.

I remember when the exact opposite was true. Their drivers were awful and their GPUs consistently overheated.

I am glad to see things change. Competition is always a good thing.

I mean, all beginnings are difficult. But yeah, I remember that as well. It was very unpleasant back then, but things improved a lot, especially since they made their stuff open source.

I felt the same way until I had a ROG Strix RX-2700xt. I started getting fatal DirectX errors playing FFXIV all the time. It was an allocation problem and there was no driver version I could try that fixed it. I started trying to learn to custom-patch a driver, gave up, and bought an Nvidia card, which I hated to do. That fixed the problem. Turns out the drivers for that specific card suck in general.

I still prefer AMD, but I’m wary of card manufacturers. Their drivers can be awful. In this case though, the default drivers didn’t work either. And you generally won’t know the word on the street until well after the cards aren’t higher end.

Anecdotal for sure, but it took a year of fighting on and off to fix and I don’t want that when I’m trying to relax.

I had that card as well and never had a single problem. But TBH I’m a Linux user, and the DirectX shit is a general problem from time to time; it’s fixable but annoying when it happens. So it’s possible I just never noticed it was a graphics card problem.

DirectX is definitely a problem, and it doesn’t help that Final Fantasy 14 is a poorly coded game, as I’ve never had problems prior to that. And I’m running Windows 10. I used to run Linux years ago, but couldn’t use Netflix on it. Now that they’re crap and Jellyfin is a better choice overall, I might have to try switching back. I’d greatly prefer to use all AMD, but we’ll see. I think the upcoming W11 upgrade-or-die ultimatum in October 2025 will force lazy people like myself to spend the time to switch or rebuy. My work environment that I support is Windows/Cisco/Fortinet, so it’s easier to come home and do the same rather than learn how to install/configure/support Linux versions of the same thing. But who knows what the next year will bring.

They’re less stingy with the VRAM as well.

It’s the 12VHPWR connector that’s melting. The problem is that it is much smaller than the connector it replaces, while also sending much more power. Without very careful engineering of the design, something like this was inevitable.
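To put rough numbers on “smaller connector, much more power”, here is a back-of-the-envelope sketch. It assumes the nominal ratings only (150 W over three 12 V pins for the older PCIe 8-pin, 600 W over six 12 V pins for 12VHPWR) and a perfectly even current split, which real cables won’t give you. The function name and the dictionary layout are made up for illustration.

```python
# Nominal connector figures: assumptions for illustration, not measurements.
RAIL_VOLTS = 12.0

CONNECTORS = {
    "PCIe 8-pin": {"watts": 150, "live_pins": 3},
    "12VHPWR": {"watts": 600, "live_pins": 6},
}


def amps_per_pin(watts, live_pins, volts=RAIL_VOLTS):
    """Current each 12 V pin carries if the load is shared evenly (idealized)."""
    return watts / volts / live_pins


for name, spec in CONNECTORS.items():
    amps = amps_per_pin(spec["watts"], spec["live_pins"])
    print(f"{name}: {amps:.2f} A per pin")
```

Under those assumptions each 12VHPWR pin carries about twice the current of an 8-pin’s pin, through a physically smaller contact. And if a partially seated plug leaves one pin without good contact, `amps_per_pin(600, 5)` shows the remaining pins picking up even more, again assuming the load redistributes evenly.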

With the attack of the 5090 everything will come in order.

I have not liked Nvidia since they took over 3dfx, but you can’t tell me they had no clue this was going to happen, unless it’s all a part of their master plan to melt PCs, forcing us to buy all new ones!?!